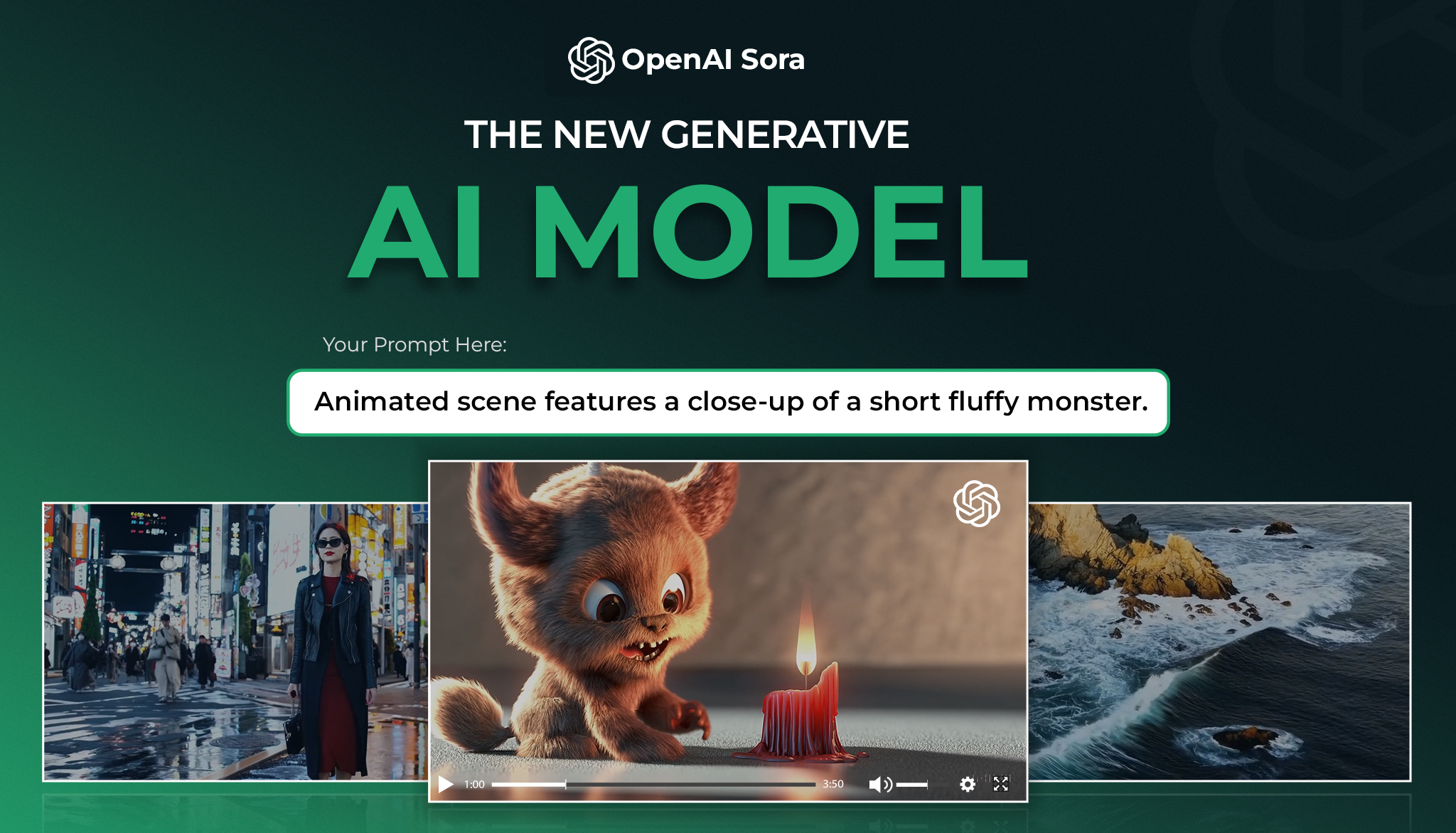

OpenAI Sora: The New Generative AI Model

In the fast-growing world of artificial intelligence, OpenAI, the creator of ChatGPT, continues to push the limits of what’s possible with AI. One of its latest creations is Sora, a text-to-video model that has captured the attention of creators all over the globe. Let’s look at what makes Sora so remarkable:

What is Sora?

What can Sora Do?

- Realistic Scene Generation: OpenAI Sora can bring text instructions to life by generating realistic and imaginative scenes.

- Length and Quality: It produces videos up to one minute long while maintaining high visual quality and adhering closely to the user’s prompt. It can generate videos at resolutions of up to 1920×1080 (landscape) or 1080×1920 (portrait).

- Complexity: Sora isn’t limited to simple scenes. It can create complex environments with multiple characters and camera angles, specific types of motion, and even accurate background details to bring life to your ideas.

- Temporal Extension: Beyond creating scenes from scratch, Sora can extend existing videos both forward and backward in time. This opens up new possibilities for content creation, storytelling, and editing.
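To get a feel for the scale implied by the length and resolution figures above, here is a rough back-of-the-envelope calculation. The frame rate and the uncompressed 8-bit RGB format are assumptions made purely for illustration; they are not stated figures for Sora.

```python
# Rough size of a one-minute 1920x1080 clip, before any compression.
WIDTH, HEIGHT = 1920, 1080   # maximum landscape resolution quoted above
DURATION_S = 60              # "up to one minute"
ASSUMED_FPS = 30             # assumption: the frame rate is not given here
BYTES_PER_PIXEL = 3          # assumption: uncompressed 8-bit RGB

frames = DURATION_S * ASSUMED_FPS
pixels_per_frame = WIDTH * HEIGHT
raw_bytes = frames * pixels_per_frame * BYTES_PER_PIXEL

print(f"frames: {frames}")
print(f"pixels per frame: {pixels_per_frame:,}")
print(f"raw size: {raw_bytes / 1e9:.1f} GB")
```

Under these assumptions the model has to keep well over a thousand high-resolution frames consistent with one another, which is part of why long, coherent video is harder than single-image generation.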

Prompt: A stylish woman walks down a Tokyo street filled with warm glowing neon and animated city signage. She wears a black leather jacket, a long red dress, and black boots, and carries a black purse. She wears sunglasses and red lipstick. She walks confidently and casually. The street is damp and reflective, creating a mirror effect of the colorful lights. Many pedestrians walk about.

How it works:

OpenAI Sora is a powerful AI video generator. It combines natural language understanding with visual synthesis to turn a written description into a video.

At a high level, the process can be pictured as the following steps:

- Text Input:
  - You can provide a text prompt describing the scene or scenario you want to see in the video.
  - The prompt can be as simple as a sentence or more detailed instructions.

- Language Understanding:
  - Sora’s model then processes the text input, extracting relevant information.
  - It understands details like characters, settings, actions, and emotions described in the prompt.

- Visual Synthesis:
  - Based on the language input, Sora generates a sequence of visual frames.
  - These frames depict the scene, characters, and actions described in the text.
  - The model ensures consistency throughout the video.

- Scene Composition:
  - Sora constructs the video by arranging these frames in a logical order.
  - It considers camera angles, lighting, and motion to create a visually pleasing result.

- Quality Control:
  - Sora aims for realism and creativity.
  - It balances the fine line between adhering to the prompt and adding artistic flair.
  - The model ensures that the generated video aligns with the user’s intent.

- Rendering:
  - Once the frames are composed, Sora renders the video.
  - The final output is a seamless video that brings the text prompt to life.

Prompt: Beautiful, snowy Tokyo city is bustling. The camera moves through the bustling city street, following several people enjoying the beautiful snowy weather and shopping at nearby stalls. Gorgeous sakura petals are flying through the wind along with snowflakes.

Strengths and Weaknesses:

Strengths:

- Scene Generation: Sora can bring text instructions to life by generating complex scenes with multiple characters, specific types of motion, and accurate background details.

- Emotional Expression: The model has a deep understanding of language, enabling it to accurately interpret prompts and create compelling characters that express vibrant emotions.

- Multi-shot Videos: Sora can generate multiple shots within a single video, ensuring a visually rich and dynamic experience for viewers.

Weaknesses:

While Sora excels in many areas, it does have its limitations:

- Physics Simulation: The model may struggle with accurately simulating the physics of complex scenes, leading to discrepancies in the generated videos.

- Spatial Details: Sora may occasionally confuse spatial details in the prompt, such as left and right orientations.

- Temporal Understanding: Precise descriptions of events that unfold over time, like following a specific camera trajectory, may pose challenges for the model.

Ensuring Safety and Reliability:

OpenAI is taking proactive steps to ensure the safety and reliability of Sora:

- Testing: OpenAI is testing Sora rigorously so that the final version is stable and reliable by the time it becomes widely available.

- Misleading Content Detection: Tools are being developed to detect misleading content generated by Sora, ensuring transparency and accountability.

- Usage Policies: Strict usage policies are in place to prevent the generation of harmful or inappropriate content, safeguarding against misuse.

Research Techniques:

Sora employs advanced research techniques to achieve its impressive capabilities:

- Diffusion Model: Sora generates videos by gradually transforming noise into coherent visuals over many steps, a process known as diffusion.

- Transformer Architecture: Similar to GPT models, Sora uses a transformer architecture, which scales well as the model grows.

- Recaptioning Technique: Borrowing from DALL·E 3, Sora uses a recaptioning technique that generates highly descriptive captions for its visual training data. The user’s prompt is likewise expanded into a more detailed description before generation. Together, these steps help the model follow user instructions more faithfully.
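The diffusion idea described above, starting from noise and refining it over many steps, can be illustrated with a toy loop. This is a minimal sketch and not Sora's implementation. The "video" is just a short list of numbers, and the trained network is replaced by a hypothetical stand-in that already knows the clean target. A real model would have to predict that target from the noisy input and the text prompt.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Start from pure noise and move toward `target` a little at each step."""
    rng = random.Random(seed)
    # Begin with Gaussian noise of the same length as the target signal.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    history = [list(x)]
    for t in range(steps):
        remaining = steps - t
        # Hypothetical stand-in for the trained network: it simply returns
        # the clean signal instead of estimating it from x and a prompt.
        predicted_clean = target
        # Close 1/remaining of the gap, so the final step lands on the prediction.
        x = [xi + (ci - xi) / remaining for xi, ci in zip(x, predicted_clean)]
        history.append(list(x))
    return history


def mean_abs_error(a, b):
    """Average absolute difference between two equal-length lists."""
    return sum(abs(ai - bi) for ai, bi in zip(a, b)) / len(a)


target = [0.0, 0.5, 1.0, 0.5, 0.0]  # stand-in for a "clean" video
history = toy_denoise(target)
errors = [mean_abs_error(x, target) for x in history]
print(f"error at start: {errors[0]:.3f}, at end: {errors[-1]:.3f}")
```

The point of the sketch is only the shape of the process: each pass removes a little more noise, and the result emerges gradually rather than in a single step.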